EmberVision: Visual Early Fire-Detection Sensor

In 2023 alone, fires caused 10,190 civilian injuries (www.nfpa.org) and $11 billion in property damage. An early warning of flames or smoke in the critical moments before a traditional detector can trigger, especially when no people are present, can limit damage and slow the spread of fire, protecting property, lives, and first responders.

Why?

Traditional smoke detectors can be slow to detect a fire when smoke must travel a long distance to reach them, leading to more damaged belongings and more lost lives as flames quickly become harder to control. This research presents EmberVision, a low-cost vision-based fire and smoke sensor that runs machine learning on a microcontroller to perform detection with minimal power consumption and no security concerns.

Model Development

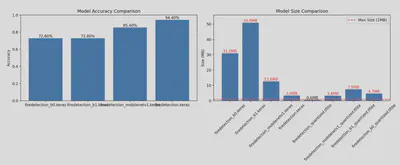

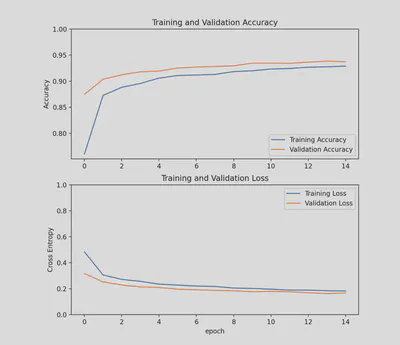

A unified fire and smoke dataset of 17,000 images was assembled from three sources and used to train and optimize a scaled-down MobileNetV2-based binary classification CNN, along with comparative models based on other known architectures. The best model achieved 94.4% accuracy before quantization. After fine-tuning with quantization-aware training (QAT) and applying dynamic range quantization, it maintained 90.5% accuracy while shrinking by 82% to 616 KB.
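To make the quantization step more concrete, here is a minimal sketch of what mapping floating-point weights to 8-bit integers looks like, and of the quantize-then-dequantize rounding that QAT simulates during training. The symmetric per-tensor scheme, the function names, and the example weights are all assumptions chosen for illustration; they are not taken from the project's actual toolchain.

```python
# Illustrative only: symmetric per-tensor int8 quantization in plain Python.

def quantize_int8(weights):
    """Map floats to integer codes in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale

def dequantize(codes, scale):
    """Recover approximate float values from the integer codes."""
    return [c * scale for c in codes]

def fake_quantize(weights):
    """Quantize then dequantize: the rounding effect QAT exposes the model to."""
    codes, scale = quantize_int8(weights)
    return dequantize(codes, scale)

weights = [0.82, -0.31, 0.05, -1.27, 0.64]  # made-up example values
codes, scale = quantize_int8(weights)
approx = fake_quantize(weights)

# The rounding error per weight is at most half of one quantization step.
max_error = max(abs(a - w) for a, w in zip(approx, weights))
```

Each weight now needs one byte instead of four, at the cost of a small rounding error. Letting the model see that error during training is what gives it a chance to compensate.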

The novel machine learning pipeline integrating QAT and INT8 quantization allowed these models to be deployed on resource-constrained ESP32-S3 microcontrollers. My approach used a two-stage training process: first training the model with standard techniques, then continuing with QAT epochs that simulate quantization effects during training. This allowed the model to learn to compensate for quantization-induced noise while keeping full-precision gradients for optimal learning. I also integrated class-balanced weighting to address dataset imbalances and carefully curated representative datasets for the quantization process.
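As an illustration of class-balanced weighting, the sketch below computes a weight for each class that is inversely proportional to its frequency, so that each class contributes equally to the loss overall. The formula is the common "balanced" heuristic, and the function name and toy labels are hypothetical; the project's exact weighting scheme may differ.

```python
from collections import Counter

def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}

# Toy labels (hypothetical): 0 = no fire, 1 = fire or smoke.
labels = [0] * 6 + [1] * 2
class_weights = balanced_class_weights(labels)

# Total weighted contribution per class ends up equal.
weighted_totals = {cls: class_weights[cls] * labels.count(cls) for cls in class_weights}
```

If training were done in Keras, for example, a dictionary like `class_weights` could be passed as the `class_weight` argument of `Model.fit`.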

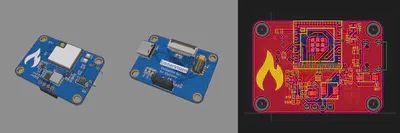

Device Design and Manufacturing

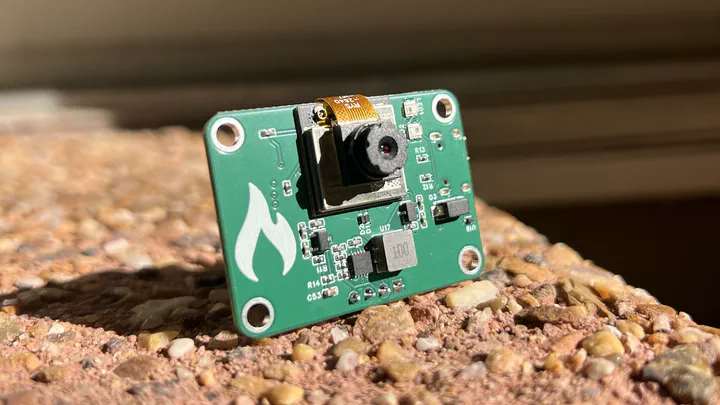

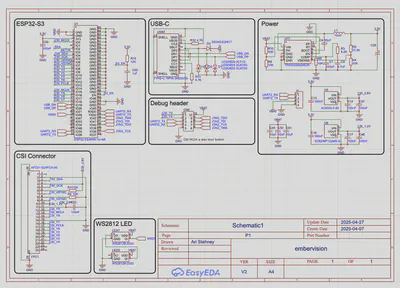

A custom PCB module was then designed to run the model. It consumes only 65 mA during operation and has simple connectors for integrating it into a larger device. The module demonstrates the feasibility of an inexpensive visual early fire detection solution for the home, something that has traditionally been unavailable. It also acts as a blueprint for deploying distributed machine learning on low-power devices, bringing machine learning back from the cloud.
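To put the 65 mA figure in perspective, the following back-of-the-envelope sketch estimates continuous runtime from a battery. The 2000 mAh capacity is a hypothetical value chosen only for illustration, and the estimate ignores regulator losses and battery derating, so real runtime would be lower.

```python
def runtime_hours(battery_mah, current_ma):
    """Ideal runtime: capacity divided by a constant current draw."""
    return battery_mah / current_ma

CURRENT_MA = 65      # operating current reported for the module
BATTERY_MAH = 2000   # hypothetical battery capacity, not from the project

hours = runtime_hours(BATTERY_MAH, CURRENT_MA)
print(f"Estimated runtime: {hours:.1f} hours")
```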

The board contains the ESP32-S3-MINI-1U-N4R2 module, which packages the required CPU, PSRAM, and flash, saving cost and reducing complexity. To aid model development and debugging, the board has a USB-C connector for programming, a JTAG/serial debugging header, a 4-pin serial interface for setting detection thresholds and reading detections, and two RGB LEDs for status and visual diagnostics.

For prototyping, the module was produced by JLCPCB and cost roughly $175 for 5 fully assembled units (about $35 each). This cost is heavily inflated by the minimum order quantities of some components on the board, as well as the PCB production fees for low-quantity orders. In addition, roughly halfway through the project, the US raised import tariffs broadly, with rates on goods from China reaching 145%, and subsequently lowered them to 55%, drastically increasing the production overhead of low-cost, low-quantity boards like this one.

Research Paper & More Information

To learn more about this project, please check out the full research paper.